Appendix B: Steering Committee Guidelines

December 18, 2008

Provisional Approval: September 16, 2009

Introduction

The steering committee guidelines that follow are framed, in part, by the Issues and Recommendations paper prepared by Sharif Shakrani (Michigan State University) and Greg Pearson (National Academy of Engineering) for the National Assessment Governing Board. The guidelines were developed over a two-day period (December 17-18, 2008) by the steering committee for the 2012 NAEP Technological Literacy Assessment Project. The steering committee presented these guidelines to the planning committee in a joint session on December 18, 2008. The guidelines were revised and reorganized according to decisions made during the steering committee's meeting of March 11-12, 2009, and again shared with the planning committee. In addition, the steering committee sought input from leaders in education, industry, business, engineering, research, and allied organizations (including the International Society for Technology in Education [ISTE], the International Technology and Engineering Educators Association [ITEEA], and the Partnership for 21st Century Skills); feedback resulting from these reviews served to further refine the guidelines.

Suggested Definition for Technological Literacy

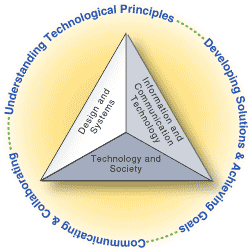

The steering committee has identified various elements that illustrate the knowledge, ways of thinking and acting, and capabilities that define technological literacy. Technological literacy, as viewed by the steering committee, is the capacity to use, understand, and evaluate technology as well as to understand technological principles and strategies needed to develop solutions and achieve goals. It encompasses the three areas of Technology and Society, Design and Systems, and Information and Communication Technology.

Recommended Grade Level to Be Assessed

The National Assessment Governing Board requested that the two committees recommend to the Board at what grade(s) the national probe should be conducted in 2012. Hence, the steering and planning committees of the NAEP Technological Literacy Framework Development Project, in a joint session on March 11, 2009, discussed the matter. On the basis of this discussion, the two committees recommend to the Governing Board that the proposed 2012 NAEP Technological Literacy probe be administered at grade 8. If funding is available for an additional probe, the committees recommend that it be given at grade 12. The lowest priority would be for a probe at grade 4. The rationale for choosing grade 8 included:

- Students are cognitively mature and are more likely to have taken a technology-related course or curriculum units.

- This is the last grade before student dropout rates increase.

- Grade 8 is aligned with the Trends in International Mathematics and Science Study (TIMSS) and the Programme for International Student Assessment (PISA) assessments, allowing for more data analysis.

- Differences in performance by gender are less pronounced than at grade 12.

- There is more opportunity to measure impact from schooling than at grade 4.

- Students may be better equipped to take a computer-based test than at grade 4.

- Grade 8 is specifically targeted in No Child Left Behind, which requires that every student be technologically literate by the time the student finishes the eighth grade.

Guiding Principles

The assessment shall consist of technological content areas making up the scale scores to be reported and technological practices that characterize the field.

1. Two content areas must be assessed, and the data for each must be reported as subscales:

- Design and Systems

- Information and Communication Technology (ICT)

If possible, the following area should be explored as a third content area of focus:

- Technology and Society

See the addendum for examples of targets to be included in the three content areas.

2. Suggested technological practices are listed below:

- Understanding Technological Principles

- Developing Solutions and Achieving Goals

- Communicating and Collaborating

The relationships of these practices to the content areas and to each other are reflected in figure B-1.

3. Content and context for the assessment should be informed by existing state standards and assessments, national (for example, International Society for Technology in Education [ISTE], International Technology and Engineering Educators Association [ITEEA]) and international standards, and research (National Academy of Engineering, National Research Council). Examples from industry, federal agencies responsible for carrying out STEM-based research (NASA, National Oceanic and Atmospheric Administration [NOAA], Department of Agriculture, National Science Foundation [NSF], Department of Energy, Department of Transportation, etc.), and science and technology museums might also be collected for building assessment ideas.

4. The assessment should have tasks that are applied to real-world contexts and should be scaffolded to ensure that reliance on students' prior knowledge of specific technology systems will be minimized. The focus should be on concepts and not on specific vocational skills or technologies.

5. "Life situations" and local and contemporaneous conditions should be used as a way to confer relevance to each grade level. Content and context of the assessment items should have relevance and meaning to the learner. If possible, options should be generated in the assessment representing different situations to remove bias due to the background of the student.

6. The planning committee must develop examples of items and tasks that measure only knowledge and practices that are assessable. The examples should not be limited to multiple choice, but should illustrate, if possible, different styles and technological tools.

7. Considerable work has already been put into the development of state standards for technology, ICT fluency, and engineering. These existing state standards and assessments should inform the development of the NAEP framework, but should not limit the framework. The planning committee should consider differences in how technological literacy is defined and treated in state standards, whether as discrete standards or as part of core content standards (e.g., mathematics, science, and language arts). Attention should also be paid to the confusion around Educational Technology versus Technological Education. There are numerous studies that specify the nature of technological literacy within the standards of various states. Each state has its own definition of technological literacy, which may vary from the definition used in the NAEP framework.

8. To avoid the potential problem of obsolescence, the 2012 Technological Literacy Assessment should focus on a broad base of knowledge and skills, not on specific technologies that may change. For example, specific communication technologies in use today (Internet-connected multimedia, smartphones and PDAs) would not have been familiar to students a decade ago, and will most likely be obsolete a decade from now.

9. The assessment should use innovative computer-based assessment strategies that are informed by research on learning and are related to the assessment target (for example, problem solving). These strategies should also reflect existing state computer-based assessments and the computer-based assessment aspects of the 2009 NAEP Science Framework. To effectively integrate innovative computer-based assessment strategies in the NAEP Technological Literacy Framework, the planning committee and/or assessment developers need to know the affordances and constraints of various technologies, how particular ones could support assessment goals, and how to use them. Specific examples of tools available for assessment tasks include probeware, presentation software, authoring tools, electronic whiteboards, drawing software, and typing tutors.

10. Computer-based assessment strategies should be informed by what is known about all learners, including those with special needs (English language learners and students with disabilities). These strategies should also be informed by the ways students currently use computers to access, process, and utilize information (Web 2.0, social networking, etc.). Effective assessment design incorporates current understandings of how people learn, how experts organize information, and the skills of effective learners. Development of the framework and item specifications should draw on expertise in engineering and technology to suggest types of problems that might be adapted to computer-based testing. The kinds of technological tools scientists and engineers use, such as simulations and visualizations, should be considered for use in the technological literacy assessment.

Addendum

The steering committee formulated the following list of targets they thought should be included in each of the content areas. Although they consider these sets of targets to be necessary, they agree that the specified targets may not be sufficient. In addition, the committee recognized that guidelines are normally "big ideas" and that this list contains detail in some areas and not in others. In the areas where detail appears, the committee considers these details sufficiently important to enumerate them for the planning committee's consideration on individual targets.

| Major Assessment Areas | Targets |
|---|---|
| Technology and Society | |
| Design and Systems | |
| Information and Communication Technology | |

* These Guidelines reflect the recommendations of the steering committee as of September 2009, and do not include the change in title and year of the assessment approved by the National Assessment Governing Board in March 2010.